AIRFLOW KUBERNETES EXECUTOR POD TEMPLATE HOW TO

How to use private images: by default, the `KubernetesPodOperator` looks for images hosted publicly on Docker Hub.

An operator definition is able to be run in the Airflow scheduler in the DAG context, so when using the operator there is no need to create the equivalent YAML/JSON object spec for the Pod you would like to run. The YAML file can still be provided with the `pod_template_file` parameter, or the Pod spec can be constructed in Python and passed via the `full_pod_spec` parameter, which requires a Kubernetes `V1Pod`.
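For comparison, here is a minimal sketch of the kind of object spec the operator saves you from writing by hand. It builds a Pod manifest as a plain Python dict and serializes it to JSON using only the standard library. The helper `build_pod_manifest`, the pod name, the container name, the image, and the command are all illustrative assumptions, not values taken from Airflow.

```python
import json


def build_pod_manifest(name, image, cmds, arguments, namespace="default"):
    """Return a minimal Kubernetes Pod manifest as a plain dict (hypothetical helper)."""
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name, "namespace": namespace},
        "spec": {
            # Run the container once; do not restart it when it exits.
            "restartPolicy": "Never",
            "containers": [
                {
                    "name": "base",  # assumed container name, for illustration
                    "image": image,
                    "command": cmds,
                    "args": arguments,
                }
            ],
        },
    }


# Illustrative values only.
manifest = build_pod_manifest("hello-pod", "debian", ["bash", "-cx"], ["echo", "10"])
print(json.dumps(manifest, indent=2))
```

With the operator, the same information is passed as Python arguments in the DAG file instead of being kept in a separate manifest.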

Example source: `tests/system/providers/cncf/kubernetes/example_kubernetes.py`.

Difference between KubernetesPodOperator and a Kubernetes object spec: the `KubernetesPodOperator` can be considered a substitute for a Kubernetes object spec definition.

How to use cluster ConfigMaps, Secrets, and Volumes with a Pod: to add ConfigMaps, Volumes, and other Kubernetes native objects, we recommend that you import the Kubernetes model API with `from kubernetes.client import models as k8s`.

For the namespace, if it is not provided through the operator arguments, the pod spec, or the template file, we'll first try to get the current namespace (if the task is already running in Kubernetes) and, failing that, we'll use the `default` namespace. For the pod name, if it is not provided explicitly, we'll use the `task_id`. A random suffix is added by default, so the pod name is generally not of great consequence.

With the Kubernetes executor, a separate pod is generated in the Kubernetes cluster for each task in a DAG.
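The namespace and pod-name fallbacks described above can be sketched with the standard library alone. This is a simplified illustration, not the operator's actual code: the helper names `resolve_namespace` and `resolve_pod_name`, the suffix length, and the name-sanitizing rule are assumptions. The file path is the standard location where Kubernetes mounts the service account namespace inside a pod.

```python
import re
import secrets

# Standard in-cluster location of the service account namespace file.
IN_CLUSTER_NAMESPACE_FILE = "/var/run/secrets/kubernetes.io/serviceaccount/namespace"


def resolve_namespace(explicit=None, namespace_file=IN_CLUSTER_NAMESPACE_FILE):
    """Pick the explicit namespace, else the current in-cluster one, else 'default'."""
    if explicit:
        return explicit
    try:
        with open(namespace_file) as fh:
            current = fh.read().strip()
        if current:
            return current
    except OSError:
        # Not running inside a Kubernetes pod (or the file is unreadable).
        pass
    return "default"


def resolve_pod_name(task_id, name=None, random_suffix=True):
    """Use the explicit name if given, else the task_id, plus an optional random suffix."""
    base = name or task_id
    # Assumed sanitizing rule: keep lowercase alphanumerics and '-'.
    base = re.sub(r"[^a-z0-9-]+", "-", base.lower()).strip("-")
    if random_suffix:
        return f"{base}-{secrets.token_hex(4)}"
    return base
```

For example, `resolve_pod_name("dry_run_demo")` returns `dry-run-demo-` followed by eight random hexadecimal characters, which is why the exact base name rarely matters.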

AIRFLOW KUBERNETES EXECUTOR POD TEMPLATE FULL

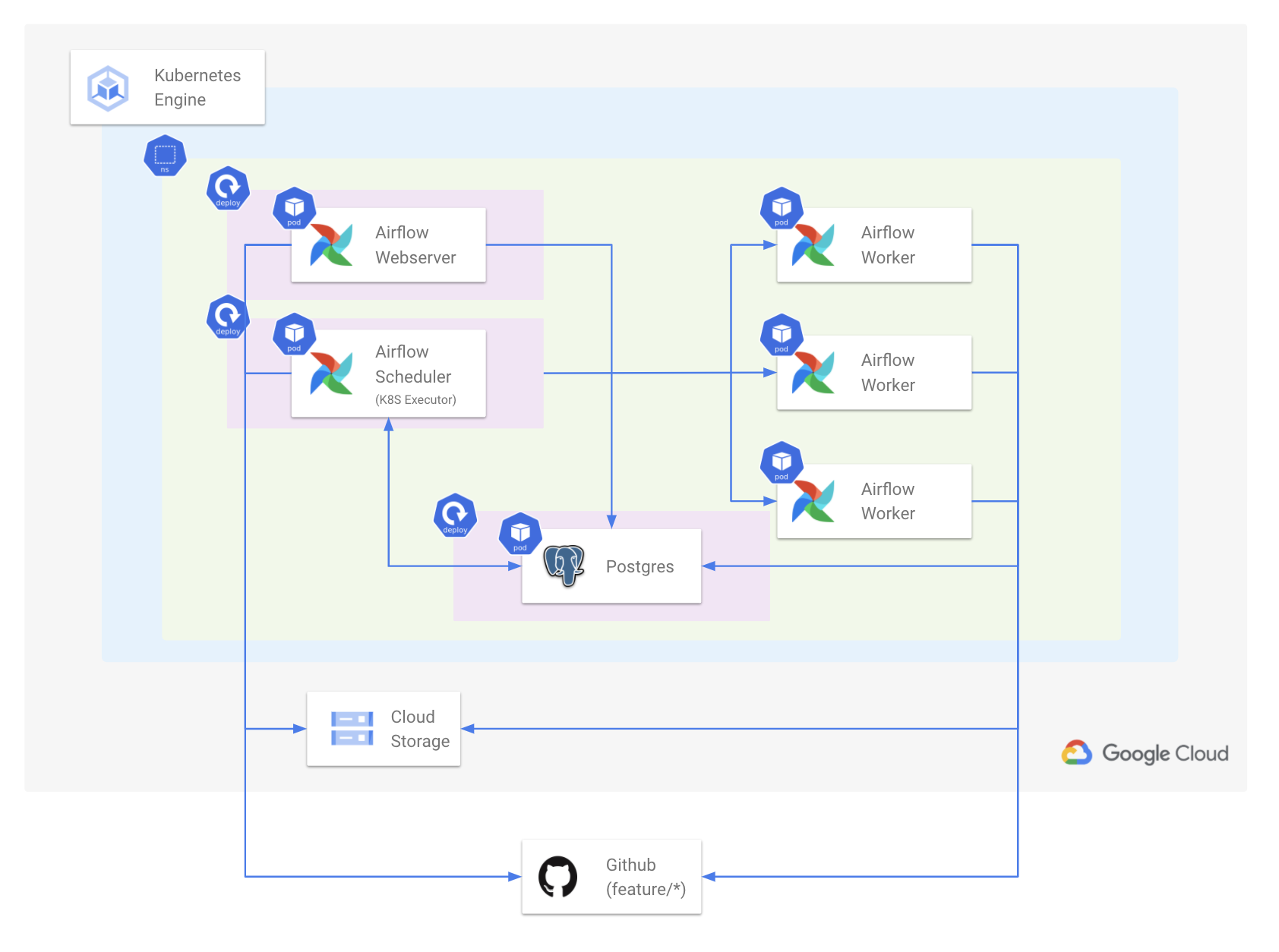

The Kubernetes executor runs each task instance in its own pod on a Kubernetes cluster.

The `KubernetesPodOperator` also allows users to supply a template YAML file using the `pod_template_file` parameter. Ultimately, it allows Airflow to act as a job orchestrator, no matter the language those jobs are written in.

You can print out the Kubernetes manifest for the pod that would be created at runtime by calling `dry_run` on an instance of the operator:

```python
from airflow.providers.cncf.kubernetes.operators.kubernetes_pod import KubernetesPodOperator

k = KubernetesPodOperator(
    name="hello-dry-run",
    image="debian",
    # Illustrative values; replace with your own command, arguments and labels.
    cmds=["bash", "-cx"],
    arguments=["echo", "10"],
    labels={"foo": "bar"},
    task_id="dry_run_demo",
    do_xcom_push=True,
)

# Print the pod manifest without launching anything.
k.dry_run()
```

Argument precedence: when building the pod object, there may be overlap between KPO params, pod spec, template and Airflow connection. The order of precedence is KPO argument > full pod spec > pod template file.
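The precedence order KPO argument > full pod spec > pod template file can be illustrated with a small, self-contained sketch. It is a deliberate simplification: the real operator merges structured pod objects, whereas this example uses flat dicts, and the function `resolve_field` and all field values are hypothetical.

```python
def resolve_field(field, kpo_args, full_pod_spec, pod_template):
    """Return the first non-None value of `field`, checking the highest-precedence source first."""
    for source in (kpo_args, full_pod_spec, pod_template):
        value = source.get(field)
        if value is not None:
            return value
    return None


# Illustrative inputs: each source sets a different subset of fields.
kpo_args = {"image": "debian:12"}
full_pod_spec = {"image": "ubuntu", "namespace": "etl"}
pod_template = {"image": "busybox", "namespace": "default", "service_account": "airflow"}

resolved = {
    field: resolve_field(field, kpo_args, full_pod_spec, pod_template)
    for field in ("image", "namespace", "service_account")
}
print(resolved)
# {'image': 'debian:12', 'namespace': 'etl', 'service_account': 'airflow'}
```

The image comes from the operator argument, the namespace from the full pod spec, and the service account from the template file, because each is the highest-precedence source that sets that field.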

The `KubernetesPodOperator` uses the Kubernetes API to launch a pod in a Kubernetes cluster. By supplying an image URL and a command with optional arguments, the operator uses the Kube Python Client to generate a Kubernetes API request that dynamically launches those individual pods. The operator enables task-level resource configuration and is optimal for custom Python dependencies that are not available through the public PyPI repository. You can specify a kubeconfig file using the `config_file` parameter; otherwise the operator defaults to `~/.kube/config`.
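The kubeconfig fallback can be pictured as a one-line path resolution. The sketch below is an assumption about the behaviour described above, not the operator's own code, and `resolve_kubeconfig` is a hypothetical helper name.

```python
import os

# Default location used when no config_file is given.
DEFAULT_KUBECONFIG = "~/.kube/config"


def resolve_kubeconfig(config_file=None):
    """Return the explicit config_file if given, else the expanded default path."""
    return os.path.expanduser(config_file or DEFAULT_KUBECONFIG)
```

Calling `resolve_kubeconfig("/tmp/custom-config")` returns the explicit path unchanged, while `resolve_kubeconfig()` expands `~/.kube/config` relative to the current user's home directory.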
